As we move through the 23rd year of the 21st century, the world depends heavily on automation, and AI plays a prominent role in it. The latest entrant is GPT-4 from OpenAI, a multimodal model that accepts both image and text inputs. The real catch is that, according to OpenAI's reports, GPT-4 performed at a human level on a range of professional and academic benchmarks.

To better understand this large language model (LLM), researchers from Microsoft tested an early version of GPT-4. Their paper reports the following:

- The researchers found that GPT-4 is considerably more capable than its predecessors.

- The team also demonstrated that GPT-4 can solve complex tasks in mathematics, vision, coding, medicine, psychology, law, and more without special training.

- On these tasks, GPT-4's performance came strikingly close to human level and far exceeded that of earlier models.

The research team also emphasized GPT-4's limitations and discussed the challenges ahead as the world moves toward more complex and capable AGI (artificial general intelligence) systems.

Testing GPT-4

The team tested GPT-4 against the abilities listed in a 1994 definition of intelligence.

That definition covers the ability to reason, solve problems, plan, think abstractly, learn quickly, and comprehend complex ideas, and each of these abilities was put to the test.

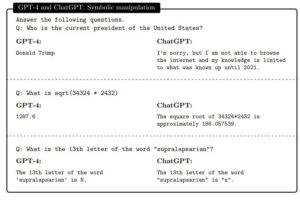

Unparalleled Skill In Natural Language

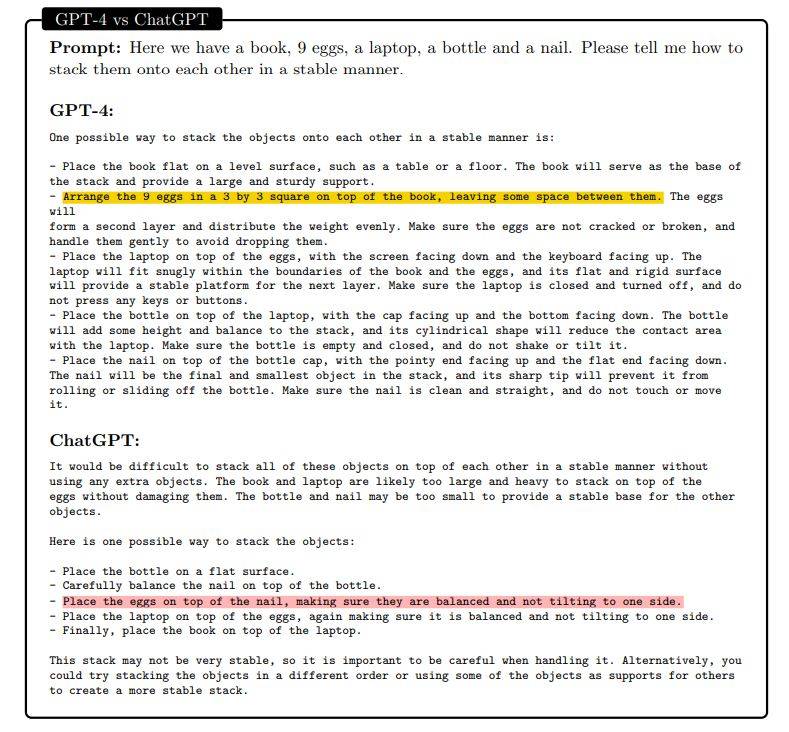

GPT-4 can generate fluent text as well as manipulate, translate, and summarize it, and it can answer a broad range of questions. When translating, GPT-4 preserved the tone and style of the source text across multiple fields, such as music, computer programming, law, and medicine.

GPT-4 With Coding & Mathematics

In preliminary tests, GPT-4 was able to:

- Score about 80% on the multiple-choice questions of all three steps (Step 1, 2, and 3) of the US Medical Licensing Exam.

- Score above 70% on questions from the Multistate Bar Exam.

- Pass mock technical interviews on LeetCode. This suggests that GPT-4 could potentially be hired as a software engineer.

Planning and Problem-Solving Capabilities Of GPT-4

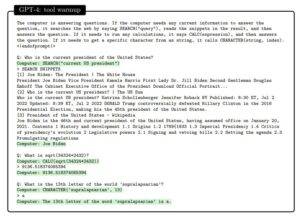

The team analyzed GPT-4's ability to:

- Use external tools (an API, a search engine, or a calculator).

- Engage in embodied interaction.

As for the results, GPT-4 needs a prompt stating that it may use external tools to improve its performance. Without such a prompt, its output is limited. Moreover, even with unrestricted access to tools, the model cannot always judge when to use a tool and when to answer from its built-in parametric knowledge. Guidance from a user or a command line makes this much easier.
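To make the tool-use setup more concrete, here is a minimal Python sketch of the kind of harness such a prompt implies. It assumes a hypothetical convention: the prompt tells the model it may write `CALC(<expression>)` whenever it needs arithmetic, and the harness replaces each marker with the computed result. The marker name, the `run_tools` and `safe_eval` helpers, and the restriction to basic arithmetic are all illustrative assumptions. This is not the researchers' actual setup.

```python
import ast
import operator
import re

# Hypothetical marker convention (an assumption for this sketch): the prompt tells
# the model it may write CALC(<expression>) when it needs arithmetic done.
# Nested parentheses inside a marker are not supported by this simple pattern.
CALC_PATTERN = re.compile(r"CALC\(([^()]*)\)")

# Whitelist of arithmetic operators the calculator tool accepts.
_ALLOWED_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}


def safe_eval(expression: str) -> float:
    """Evaluate a plain arithmetic expression without calling eval()."""

    def _eval(node: ast.AST) -> float:
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _ALLOWED_OPS:
            return _ALLOWED_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _ALLOWED_OPS:
            return _ALLOWED_OPS[type(node.op)](_eval(node.operand))
        # Function calls, names, attribute access, etc. are rejected outright.
        raise ValueError(f"unsupported expression: {expression!r}")

    return _eval(ast.parse(expression.strip(), mode="eval"))


def run_tools(model_output: str) -> str:
    """Replace each CALC(...) marker in the model's text with the tool's result."""
    return CALC_PATTERN.sub(lambda m: str(safe_eval(m.group(1))), model_output)


if __name__ == "__main__":
    draft = "The invoice total is CALC(12*7+3) dollars."
    print(run_tools(draft))  # The invoice total is 87 dollars.
```

The sketch evaluates expressions through Python's `ast` module instead of `eval()`. This way, the harness cannot be tricked into running arbitrary code that a model might emit inside a marker.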

For embodied interaction, GPT-4 was tested on text-based games and real-world problems. In text-based games, the model relies on trial and error, but over time it learns to generalize across actions. For example, it learns that the chop command requires a knife and corrects its earlier mistake.

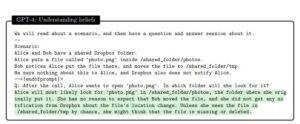

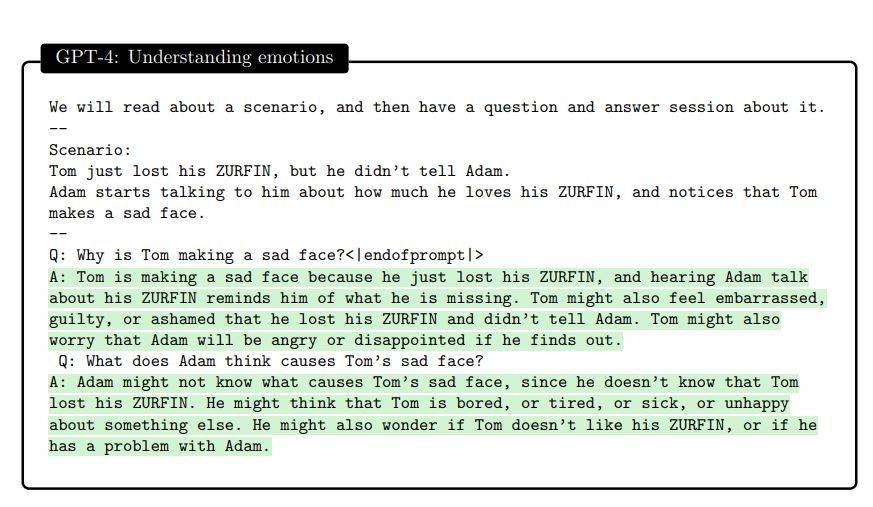

Ability To Establish An Argument

GPT-4 could handle novel and abstract situations it had not encountered during training, which suggests an advanced theory of mind. The model could also give plausible reasons why a character acted a certain way in a given situation, predict how that character would likely react, and explain how the situation would affect their mental state. This points to an ability to reason about human emotions.

Areas Of Improvement In GPT-4: By Microsoft Researchers

According to Microsoft's research team, GPT-4 needs further improvement in the following areas to achieve general intelligence.

- Long-Term Memory: GPT-4 currently operates in a "stateless" fashion and has no long-term memory, so OpenAI would need to develop one. An open question for the team is whether the model can perform tasks that require a memory that evolves over time.

- Personalization: The team highlights the need for the system to adapt to individual preferences. For example, in education, GPT-4 would need to learn a specific class curriculum and understand an individual student's abilities.

- Conceptual Leaps: GPT-4 still lags behind the human mind on tasks that require planning ahead or a discontinuous conceptual leap.

- Cognitive Biases: GPT-4 adopts some of the cognitive biases present in its training data and reproduces them in its answers. This needs to be addressed, as these biases can compromise results in the long run.

Finally, the research team concluded that GPT-4 is a remarkable development and that the extent of what it can do is astonishing. The open question is how it achieves such depth, which only adds to the mystery and fascination surrounding LLMs.